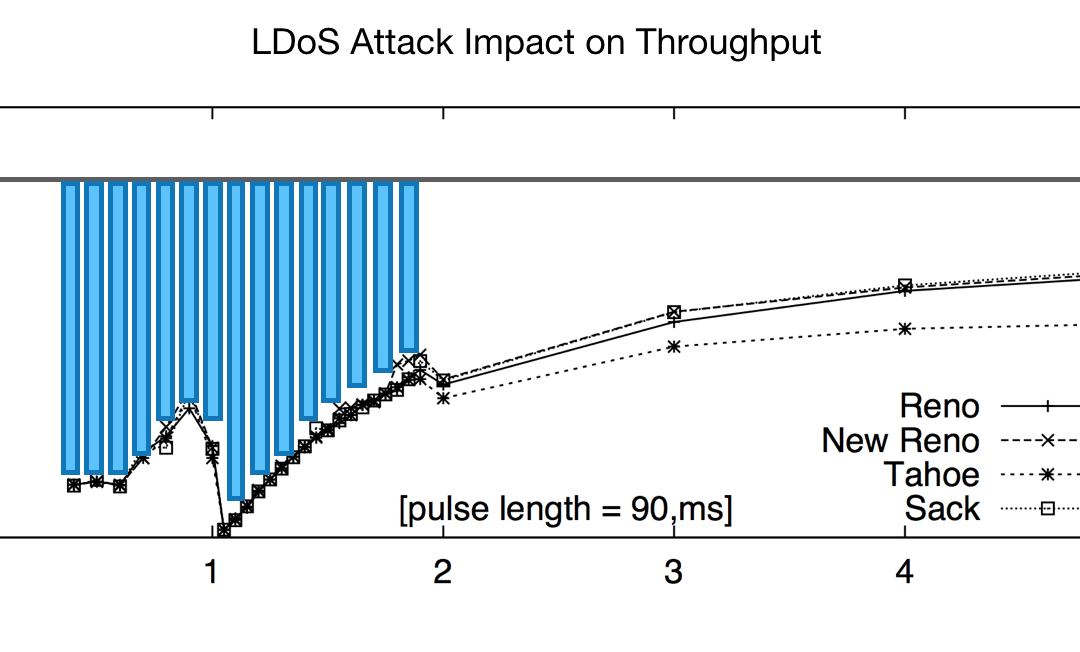

While high-rate DoS attacks take the sledgehammer approach of flooding networks with packets aimed at specific servers, low-rate DoS (LDoS) attacks rely on short, intermittent bursts of high-rate traffic separated by periods of little or no activity, which makes them much harder to detect and counter. LDoS attacks target TCP’s congestion control algorithms, which interpret these bursts as a sign of network overload and cause servers to progressively reduce their send rates to avoid packet loss until throughput collapses. LDoS attackers time subsequent bursts to trigger further throughput collapses before the connection can fully recover, degrading performance for an extended period. The graph on the left below shows a typical scenario in which a 90-millisecond burst every one to two seconds can cause up to a 95% reduction in throughput, regardless of the TCP version. The corresponding loss of available bandwidth is shown by the vertical blue bars in the graph and highlighted by the image on the right.
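The burst-and-collapse cycle described above can be illustrated with a deliberately simplified toy model. It is a sketch under stated assumptions, not a reproduction of the measurements in the graph or of any real TCP stack: time advances one RTT at a time, any round that overlaps a burst is assumed to lose packets and force a retransmission timeout, and the sender then restarts from a congestion window of one segment. The 1-second minimum RTO follows RFC 6298; `RTT_MS`, `CAPACITY`, `simulate` and `overlaps_burst` are hypothetical names and values chosen for illustration.

```python
# Toy model of a low-rate DoS (LDoS) burst pattern against a TCP-like sender.
# Hypothetical assumptions, for illustration only:
#  - time advances in whole rounds of RTT_MS milliseconds;
#  - any round that overlaps an attack burst loses packets and forces a
#    retransmission timeout (RTO), after which cwnd restarts at 1 segment;
#  - the bottleneck carries at most CAPACITY segments per round.

RTT_MS = 100      # round-trip time (assumed)
RTO_MS = 1000     # 1 s minimum RTO, as recommended by RFC 6298
CAPACITY = 50     # bottleneck capacity in segments per RTT (assumed)


def overlaps_burst(start_ms, end_ms, period_ms, burst_ms):
    """True if [start_ms, end_ms) intersects any burst [k*period, k*period + burst)."""
    k = start_ms // period_ms
    burst_start = k * period_ms
    if start_ms < burst_start + burst_ms:   # round starts inside the current burst
        return True
    return burst_start + period_ms < end_ms  # next burst starts before the round ends


def simulate(duration_ms, period_ms=None, burst_ms=90):
    """Segments delivered in duration_ms; period_ms=None means no attack."""
    t, cwnd, ssthresh, delivered = 0, 1, CAPACITY, 0
    while t + RTT_MS <= duration_ms:
        if period_ms is not None and overlaps_burst(t, t + RTT_MS, period_ms, burst_ms):
            # Burst causes loss: halve ssthresh, wait out the RTO, restart from cwnd = 1.
            ssthresh = max(cwnd // 2, 2)
            cwnd = 1
            t += RTT_MS + RTO_MS
            continue
        delivered += min(cwnd, CAPACITY)
        # Slow start below ssthresh, additive increase above it, capped at the bottleneck.
        cwnd = min(cwnd * 2 if cwnd < ssthresh else cwnd + 1, CAPACITY)
        t += RTT_MS
    return delivered


if __name__ == "__main__":
    baseline = simulate(60_000)
    attacked = simulate(60_000, period_ms=1000, burst_ms=90)
    print(f"baseline: {baseline} segments, under LDoS: {attacked} segments")
    print(f"throughput retained: {attacked / baseline:.1%}")
```

Because the bursts recur at roughly the same interval as the retransmission timer, the modeled sender spends each cycle either waiting out a timeout or climbing back from a one-segment window, even though the attacker transmits only 9% of the time (90 ms out of every 1,000 ms).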

Today’s application and network environments increasingly generate jitter that has the same impact on throughput and performance as an LDoS attack. Streaming video, fast data, IoT and web applications frequently transmit data in random bursts that create jitter before traffic even enters the network. The cloud environments that often host these applications add virtualization jitter from hypervisor packet delays and VM crosstalk. The last-mile Wi-Fi and mobile networks used to reach them frequently suffer from jitter caused by RF interference, fading and channel access contention. In the busy, high-speed, small-cell, low-round-trip-time networks planned for 5G, jitter-induced throughput collapse will become an even greater factor.
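The post doesn’t say how jitter is quantified. One widely used definition is the RTP interarrival jitter from RFC 3550 (section 6.4.1): a running average of how much the one-way transit time changes from one packet to the next. The sketch below implements that estimator using millisecond timestamps (RFC 3550 itself works in RTP timestamp units), and the sample delays are hypothetical. It is offered only to make the term concrete and is not necessarily the metric Badu Networks uses.

```python
def interarrival_jitter(send_times_ms, recv_times_ms):
    """Smoothed interarrival jitter per RFC 3550, section 6.4.1, in milliseconds.

    send_times_ms[i] and recv_times_ms[i] are the send and arrival times of
    packet i, listed in the order the packets were received.
    """
    if len(send_times_ms) != len(recv_times_ms):
        raise ValueError("send and receive lists must be the same length")
    jitter = 0.0
    for i in range(1, len(send_times_ms)):
        # D(i-1, i): change in one-way transit time between consecutive packets.
        d = (recv_times_ms[i] - recv_times_ms[i - 1]) - (send_times_ms[i] - send_times_ms[i - 1])
        # Exponential smoothing with gain 1/16, as specified in RFC 3550.
        jitter += (abs(d) - jitter) / 16.0
    return jitter


if __name__ == "__main__":
    send = [i * 20 for i in range(50)]        # one packet every 20 ms
    steady = [s + 30 for s in send]           # constant 30 ms transit time
    # Hypothetical bursty path: transit alternates between 30 ms and 50 ms.
    bursty = [s + (30 if i % 2 == 0 else 50) for i, s in enumerate(send)]
    print(f"steady path: {interarrival_jitter(send, steady):.2f} ms")
    print(f"bursty path: {interarrival_jitter(send, bursty):.2f} ms")
```

A constant delay, however large, produces zero jitter; only variation in delay between packets counts.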

To overcome slow performance caused by jitter-induced throughput collapse, all three components of the UX (user experience) triad shown below must be addressed: (1) the behavior of applications on the origin server, whether on-premises or in the cloud; (2) the last-mile wireless connections through which most users access them; and (3) the network itself, which may be genuinely congested because of excess traffic or an equipment failure. Jitter from any of these sources can trigger throughput collapse over the entire network path between the server and the end user.

Traditional network optimization tools rely on compression, deduplication, and caching to improve performance by reducing bandwidth usage. These techniques do nothing to filter out the impact of jitter. They also require access to the payload in unencrypted form, which adds the administrative overhead and security risk of exposing sensitive encryption keys to a third-party solution, as well as decryption and re-encryption delays that become yet another source of jitter. These drawbacks make traditional network optimization tools largely ineffective now that over 80% of traffic is encrypted.
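Why compression-based optimization breaks down on encrypted traffic is easy to check. Well-encrypted data is statistically indistinguishable from random bytes, so this sketch uses `os.urandom` as a stand-in for ciphertext rather than running a real cipher, and compares how well `zlib` compresses it against repetitive plaintext. The HTTP request used as plaintext is a hypothetical sample.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower means more savings)."""
    return len(zlib.compress(data, 9)) / len(data)


# Hypothetical repetitive plaintext, like many similar requests on a WAN link.
plaintext = b"GET /index.html HTTP/1.1\r\nHost: example.com\r\n\r\n" * 2000

# Stand-in for ciphertext: random bytes of the same length (assumption:
# real encrypted traffic looks random to anything without the keys).
ciphertext_like = os.urandom(len(plaintext))

if __name__ == "__main__":
    print(f"plaintext ratio:       {compression_ratio(plaintext):.3f}")
    print(f"ciphertext-like ratio: {compression_ratio(ciphertext_like):.3f}")
```

The plaintext shrinks dramatically, while the random stand-in does not shrink at all and typically grows slightly from framing overhead. Byte-level deduplication runs into the same limit, since it also depends on finding repeated byte patterns.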

Only Badu Networks’ patented WarpEngine algorithmically filters out the impact of jitter regardless of its source. WarpEngine doesn’t rely on jitter buffering, which can destroy performance for real-time video, voice and IoT applications. In addition, WarpEngine is single-ended and doesn’t need access to the payload. It delivers 2x to 10x performance boosts for all types of traffic (encrypted, unencrypted or compressed) on existing WAN, Wi-Fi and mobile networks, and future-proofs them for 5G. WarpEngine achieves these impressive results by recapturing bandwidth previously lost to jitter-induced throughput collapse, for a fraction of the cost of a network upgrade.
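To see why jitter buffering is a poor fit for real-time traffic, consider a generic fixed playout (de-jitter) buffer, sketched below. This is not a model of WarpEngine, whose algorithm the post does not describe; `playout_stats` and the sample delays are hypothetical. Each packet is held until a fixed playout delay after it was sent, and packets that arrive later than that are discarded as late. The only way to stop discarding packets hit by a delay spike is to raise the playout delay above the worst-case transit time, which adds that latency to every packet.

```python
def playout_stats(send_ms, recv_ms, playout_delay_ms):
    """Fixed jitter buffer: packet i is played at send_ms[i] + playout_delay_ms.

    Returns (late_fraction, mean_buffering_ms): the share of packets that arrive
    after their playout time (and are discarded), and the mean time on-time
    packets sit in the buffer before being played.
    """
    late, waits = 0, []
    for s, r in zip(send_ms, recv_ms):
        play_at = s + playout_delay_ms
        if r > play_at:
            late += 1                   # arrived too late to be played
        else:
            waits.append(play_at - r)   # extra delay added by the buffer
    mean_wait = sum(waits) / len(waits) if waits else 0.0
    return late / len(send_ms), mean_wait


if __name__ == "__main__":
    send = [i * 20 for i in range(8)]             # one packet every 20 ms
    transit = [30, 30, 80, 30, 45, 30, 120, 30]   # hypothetical one-way delays
    recv = [s + d for s, d in zip(send, transit)]
    for delay in (40, 90, 130):
        late, wait = playout_stats(send, recv, delay)
        print(f"playout delay {delay} ms: {late:.0%} late, {wait:.1f} ms mean buffering")
```

Adaptive jitter buffers adjust the playout delay on the fly, but they face the same trade-off between late-packet loss and added delay.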

Slow performance is the new downtime, damaging employee productivity and chasing potential customers away from your website, so it can’t be ignored. See if WarpEngine can help you overcome it by trying our easy-to-use test tool on your network now.