Why It Matters, Why Your Network Can’t Always Deliver It, and How to Ensure It Does

Executive Summary

Network QoS (Quality of Service) has been defined as the ability of a network to deliver the level of performance that business-critical applications require to meet their SLAs. The network is the lifeblood of virtually every business, and the impact of poor performance can be devastating. Amazon calculated that a page load slowdown of just one second cost it $1.6 billion in sales each year. Google found that slowing search response times by just four-tenths of a second reduces the number of searches by eight million per day, with a corresponding reduction in ad revenue. In an A/B test comparing the impact of page load times on conversion, Shopzilla found that faster pages delivered seven to twelve percent more conversions than slower ones. Analyst firm Aberdeen Group found that, across a broad range of firms, a one-second increase in response time reduces conversion rates by seven percent, page views by eleven percent, and customer satisfaction rates by seventeen percent.

When faced with throughput and performance challenges, companies generally turn to costly network upgrades to increase bandwidth. The upgrades are typically coupled with QoS solutions designed to ensure that business-critical applications meet SLAs by controlling four key metrics: available bandwidth, packet loss, latency and jitter. Network QoS solutions do an effective job of prioritizing traffic, preventing packet loss, and reducing latency. However, they struggle to overcome the reduced throughput and resulting slow performance caused by jitter, the variation in packet delay produced by real-time changes in network traffic flow. Jitter has become increasingly common due to the nature of today’s networks and applications, and the way they are deployed and used. Network upgrades offer no remedy, because jitter-induced throughput collapse often becomes more frequent when more bandwidth is available.

The root of the problem is that TCP, the most widely used transport protocol, misinterprets jitter as congestion, caused either by too many packets flooding the available bandwidth or by a hardware failure somewhere on the path. In response, TCP reduces throughput, slowing down network traffic in an effort to prevent data loss even when plenty of bandwidth is available and all network equipment is fully operational.

Increasing Sources of Jitter

Today’s streaming services, IoT devices, voice, video and web applications typically transmit data in unpredictable bursts. This means jitter often originates on the servers hosting these applications, before their traffic even enters the network. When applications run in a virtualized environment like AWS, scheduling conflicts between VMs and packet transfer delays caused by the hypervisors managing them add further sources of jitter.

The popularity of cloud services, which Gartner estimates will grow from 17% of all IT spending in 2017 to 28% by 2021, guarantees that virtualization’s contribution to jitter will grow correspondingly. In addition, many organizations have adopted cloud-first strategies for deploying new applications. As a result, virtualization jitter and the throughput collapse that accompanies it will become a major factor in the success or failure of many application rollouts.

Jitter from physical and virtual server environments is compounded by the volatility of Wi-Fi and mobile networks, which frequently suffer from RF interference, fading and channel access conflicts. An estimated 70% of internet traffic is now consumed over a wireless connection. This already high percentage is expected to grow substantially over the next few years with the burgeoning use of cloud services and the rollout of billions of IoT-enabled devices that already make heavy use of Wi-Fi and LTE.

According to Gartner, the number of IoT devices will grow from 6.6 billion in 2016 to 20.8 billion by 2020. Because many IoT devices use Wi-Fi or LTE, primarily to upload data to the cloud, the combined impact of IoT, wireless and virtualization jitter on tomorrow’s networks will be significant.

In an increasingly real-time, virtualized and wireless world, guaranteeing QoS is impossible without a solution that effectively addresses TCP’s reaction to jitter. To understand what is required to eliminate the negative business impacts of slow network performance, it’s important to first look at the capabilities of the solutions that support network QoS.

QoS Solution Overview

MPLS

In a traditional IP network, each router makes an independent forwarding decision for each packet by performing a routing table lookup based on the packet’s network-layer header, primarily its destination IP address. Each router repeats this process at every hop until the packet reaches its destination. These routing table lookups and independent forwarding decisions add overhead and unpredictability that make it impossible to meet SLAs for mission-critical applications. On dedicated WAN links between branch offices and the on-premises data centers that traditionally host enterprise applications, QoS has been addressed with Multiprotocol Label Switching (MPLS), introduced in the late 1990s. With MPLS, bandwidth is reserved and traffic is prioritized by forcing it over a predetermined path to its destination, based on labels assigned to each packet as it enters the network, rather than leaving each router along the way to decide the next hop.
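The difference between the two forwarding styles can be illustrated with a minimal Python sketch: an IP router performs a longest-prefix-match lookup on the destination address, and every router on the path repeats it independently, while an MPLS label-switching router does one exact-match lookup on the incoming label and swaps it. The routing table, label values and interface names are hypothetical, and real routers use tries or TCAM hardware rather than a linear scan.

```python
import ipaddress

# Hypothetical routing table for one IP router: prefix -> outgoing interface.
ROUTES = {
    ipaddress.ip_network("0.0.0.0/0"): "wan0",  # default route
    ipaddress.ip_network("10.0.0.0/8"): "eth0",
    ipaddress.ip_network("10.1.0.0/16"): "eth1",
    ipaddress.ip_network("10.1.2.0/24"): "eth2",
}

# Hypothetical label forwarding table for one MPLS label-switching router:
# incoming label -> (outgoing label, outgoing interface).
LFIB = {
    100: (200, "eth1"),
    101: (201, "eth2"),
}


def ip_forward(dst: str) -> str:
    """Longest-prefix match, repeated independently by every IP router on the path."""
    addr = ipaddress.ip_address(dst)
    matches = [net for net in ROUTES if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)  # most specific prefix wins
    return ROUTES[best]


def mpls_forward(label: int) -> tuple:
    """Label switching: a single exact-match lookup, then swap the label."""
    return LFIB[label]


print(ip_forward("10.1.2.7"))   # eth2: the /24 is the longest matching prefix
print(ip_forward("10.9.9.9"))   # eth0
print(ip_forward("192.0.2.1"))  # wan0: only the default route matches
print(mpls_forward(100))        # (200, 'eth1')
```

Because the label is bound to a path when the packet enters the network, each label-switching router along that path needs only the exact-match step.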

The growing popularity of SaaS applications and other cloud services has made dedicated MPLS networks, which route internet traffic through an on-premises data center, too costly and cumbersome for many organizations. This cloud-bound traffic increasingly eats into available bandwidth, degrading performance for in-house applications. In addition, MPLS labels have no effect once packets leave the on-premises data center. This coexistence of on-premises and cloud applications has given rise to the concept of the hybrid WAN. A typical hybrid WAN features an MPLS link that connects a branch location to an on-premises data center for access to in-house systems, and a broadband connection that provides direct internet access to the cloud.

SD-WAN – QoS for the Hybrid WAN

In this hybrid environment, SD-WAN solutions have entered the QoS arena. SD-WAN software makes it possible to bond multiple WAN connections (broadband internet, private dedicated MPLS, LTE, or any other transport) and continuously monitor performance across all of them, routing the highest-priority traffic over the best-performing connection at any point in time. The key features that make SD-WAN a compelling QoS solution for today’s environment are: point-and-click prioritization at the business application level, rather than at the network configuration level as with MPLS; dynamic path selection, which moves application traffic between network paths on the fly based on current performance; and built-in resilience, because all lines are used in an active/active state. If one line goes down, traffic continues over the remaining lines without waiting for a failover process to complete.
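Dynamic path selection with active/active failover can be sketched in a few lines of Python. This is a hypothetical illustration, not any vendor’s algorithm: the `PathStats` fields, the scoring weights and the path names are assumptions chosen only to show the idea of ranking live paths by measured latency, jitter and loss.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PathStats:
    name: str
    latency_ms: float  # most recent latency measurement
    jitter_ms: float   # most recent delay-variation measurement
    loss_pct: float    # most recent packet loss, in percent
    up: bool = True


def score(p: PathStats) -> float:
    # Hypothetical weighting (lower is better); real products use their own policies.
    return p.latency_ms + 2.0 * p.jitter_ms + 50.0 * p.loss_pct


def select_path(paths: List[PathStats]) -> str:
    """Pick the best-scoring path among those currently up; any live line is usable."""
    candidates = [p for p in paths if p.up]
    if not candidates:
        raise RuntimeError("no WAN path available")
    return min(candidates, key=score).name


paths = [
    PathStats("mpls", latency_ms=30, jitter_ms=1, loss_pct=0.0),
    PathStats("broadband", latency_ms=20, jitter_ms=8, loss_pct=0.5),
    PathStats("lte", latency_ms=45, jitter_ms=15, loss_pct=1.0),
]
print(select_path(paths))  # mpls (score 32 vs 61 and 125)
paths[0].up = False        # the MPLS line fails
print(select_path(paths))  # broadband: traffic moves to the next-best live line
```

Since every line is already carrying traffic, "failover" here is simply re-running the selection over the paths that remain up.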

Why SD-WAN Can’t Guarantee QoS

SD-WAN vendors claim their solutions not only measure packet loss, latency and jitter, but also compensate for them to ensure QoS. However, there are three fundamental problems with this claim. First, SD-WAN is an edge technology. It can make decisions based on measurements at the edge, but it has no control over the path a packet takes once it leaves the premises and enters the cloud. Indeed, most SD-WAN vendors themselves recommend keeping an MPLS link in parallel with the broadband link to ensure QoS for real-time traffic like voice and video. Second, compensating for latency due to distance isn’t possible with any technology, since data can’t travel faster than the speed of light. What vendors really mean is that they can move traffic to a less congested path on the fly, use WAN optimization techniques to speed traffic by reducing bandwidth usage, or multiplex a single connection over multiple paths. Finally, to compensate for jitter, SD-WAN solutions try to space packets evenly by providing a “jitter buffer” that realigns packet timing for consistency. This may work for some applications, but it adds delay and destroys performance for real-time applications like streaming video and IoT that send data in irregular bursts.
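The trade-off a jitter buffer makes can be shown with a minimal fixed-delay playout buffer in Python. This is a generic textbook model, not any SD-WAN product’s implementation; the packet timings and buffer sizes are made-up values.

```python
from typing import List, Optional, Tuple


def playout_schedule(packets: List[Tuple[float, float]], buffer_ms: float):
    """
    Fixed-delay jitter buffer.

    packets: (send_time_ms, arrival_time_ms) for each packet, in send order.
    Every packet is held until send_time + buffer_ms, which restores the sender's
    original spacing but adds delay. A packet that arrives after its slot has
    already passed cannot be played out and is dropped.
    Returns (release_times, late_count), with None for dropped packets.
    """
    release: List[Optional[float]] = []
    late = 0
    for sent, arrived in packets:
        slot = sent + buffer_ms
        if arrived <= slot:
            release.append(slot)
        else:
            release.append(None)
            late += 1
    return release, late


# Packets sent every 20 ms, arriving with variable network delay (5, 18, 1 and 35 ms).
pkts = [(0, 5), (20, 38), (40, 41), (60, 95)]
print(playout_schedule(pkts, 30))  # ([30, 50, 70, None], 1): the 35 ms straggler misses its slot
print(playout_schedule(pkts, 40))  # ([40, 60, 80, 100], 0): no loss, but every packet waits 40 ms
```

A larger buffer absorbs more jitter only by adding the same delay to every packet, which is the cost the paragraph describes for bursty real-time traffic.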

WAN Optimization

WAN optimization vendors, many of whom also have SD-WAN offerings, employ techniques such as data deduplication, compression and caching. These approaches yield some benefit in accelerating traffic by reducing its volume, but they can’t do much for data that is already compressed or encrypted, and they require access to the payload. Now that up to 80% of all internet traffic is encrypted, according to Google, the need for payload access introduces the added overhead of decryption and re-encryption at each endpoint, as well as the risk of exposing sensitive encryption keys to third-party vendors and their tools.
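Why compression does little for encrypted traffic can be checked with Python’s standard `zlib` module. The sketch assumes random bytes from `os.urandom` are a fair stand-in for ciphertext, since the output of a good cipher is statistically indistinguishable from random data; the sample HTTP request text is made up.

```python
import os
import zlib


def ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower means more savings)."""
    return len(zlib.compress(data, 9)) / len(data)


redundant = b"GET /index.html HTTP/1.1\r\nHost: example.com\r\n\r\n" * 1000
ciphertext_like = os.urandom(len(redundant))  # stand-in for an encrypted payload

print(f"redundant plaintext: {ratio(redundant):.3f}")        # a small fraction of the original
print(f"random (encrypted):  {ratio(ciphertext_like):.3f}")  # about 1.0, no savings
```

Deduplication faces the same limit: identical plaintext encrypted under different keys or nonces produces different bytes, so there is no repetition to detect without decrypting first.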

WAN optimization vendors attempt to address jitter primarily by managing the size of TCP’s congestion window (CWND) to let more traffic through a connection, but this ultimately doesn’t stop jitter-induced throughput collapse, or the ensuing slow recovery process.

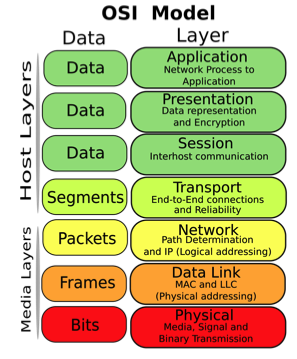

From the perspective of the OSI model, QoS solutions focus primarily on layer 7, the application layer where SD-WAN software and WAN optimization solutions operate, and on layers 2 and 3, the data link and network layers, where MPLS-labeled packets interact with switches and routers. Network administrators often try to address QoS problems resulting from jitter at layer 1, the physical layer, by upgrading bandwidth, only to see performance deteriorate further in many cases, as the incidence of jitter-induced throughput collapse often increases with more bandwidth. None of these approaches can fully guarantee QoS, because they cannot deal effectively with jitter, a layer 4 (transport) issue that most vendors only partially address.

Overcoming Jitter-Induced Throughput Collapse – The First Step in Ensuring QoS

TCP’s original design assumption of orderly packet delivery at relatively consistent time intervals made sense when it was introduced over 40 years ago, and it still holds when networks truly become saturated. However, today’s streaming applications often generate traffic characterized by short, unpredictable bursts of data that cause significant variation in round-trip time (RTT).
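How RTT variation interacts with TCP’s retransmission timer can be sketched using the standard RTO estimator from RFC 6298. This is a minimal model of the published algorithm, not any particular kernel’s code; the 1 ms clock granularity is an assumption, and the demo uses a 200 ms floor like Linux rather than the RFC’s 1-second floor.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RtoEstimator:
    """Retransmission timeout per RFC 6298 (alpha = 1/8, beta = 1/4, K = 4); times in seconds."""
    min_rto: float = 1.0        # RFC 6298 floor; Linux uses a 200 ms floor instead
    granularity: float = 0.001  # clock granularity G (assumed 1 ms)
    srtt: Optional[float] = None
    rttvar: float = 0.0
    rto: float = 1.0            # initial RTO before any RTT sample

    def sample(self, r: float) -> float:
        """Fold one RTT measurement into SRTT/RTTVAR and return the new RTO."""
        if self.srtt is None:   # first measurement
            self.srtt = r
            self.rttvar = r / 2
        else:                   # RTTVAR must use the previous SRTT, so update it first
            self.rttvar = 0.75 * self.rttvar + 0.25 * abs(self.srtt - r)
            self.srtt = 0.875 * self.srtt + 0.125 * r
        self.rto = max(self.min_rto, self.srtt + max(self.granularity, 4 * self.rttvar))
        return self.rto


est = RtoEstimator(min_rto=0.2)
for _ in range(20):
    est.sample(0.050)           # steady 50 ms RTT: RTTVAR decays, RTO sits at the floor
print(est.rto)                  # 0.2

burst_delay = 0.300             # one ACK held up 300 ms by a traffic burst, nothing lost
print("spurious timeout" if burst_delay > est.rto else "no timeout")
```

After a long run of steady samples the timer is tight, so a single burst-induced delay longer than the RTO fires the timer even though no packet was lost.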

TCP’s two congestion management mechanisms, retransmission and congestion window (CWND) management, are designed to ensure reliability while avoiding packet loss. Of the two, retransmission is by far the dominant factor. With each retransmission timeout, the Retransmission Timeout (RTO) value is doubled, and CWND is reduced on the assumption that the network has become congested. After three RTOs, throughput is halved. After seven RTOs, throughput collapses because TCP treats the packets as lost rather than merely delayed, and stops sending traffic to guard against further data loss. In effect, TCP itself becomes the bottleneck.
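The cost of repeated timeouts can be sketched from the standard rules in RFC 6298 (exponential RTO backoff) and RFC 5681 (ssthresh and loss-window reset). This models the specifications rather than any particular TCP implementation; the 60-second cap, the 1460-byte segment size and the assumption that a full window was in flight at the first timeout are illustrative choices.

```python
SMSS = 1460  # sender maximum segment size in bytes (typical for Ethernet paths)


def consecutive_timeouts(initial_rto: float, cwnd: int, n: int, max_rto: float = 60.0):
    """
    Model n back-to-back retransmission timeouts for the same segment.

    RFC 6298: the RTO doubles after every timeout (this sketch caps it at max_rto).
    RFC 5681: on the first timeout ssthresh = max(FlightSize / 2, 2 * SMSS), and
    cwnd drops to one segment (the loss window). FlightSize is assumed to equal
    the full cwnd when the first timer fires.
    Returns one (timeout_no, rto_s, cwnd_bytes, ssthresh_bytes, idle_s) tuple per timeout.
    """
    ssthresh = max(cwnd // 2, 2 * SMSS)
    rto, idle, history = initial_rto, 0.0, []
    for i in range(1, n + 1):
        idle += rto                  # the sender is stalled until the timer expires
        cwnd = SMSS                  # restart from a single segment
        history.append((i, rto, cwnd, ssthresh, idle))
        rto = min(rto * 2, max_rto)  # exponential backoff before the next attempt
    return history


for i, rto, cwnd, ssthresh, idle in consecutive_timeouts(0.2, 64 * SMSS, 7):
    print(f"timeout {i}: RTO={rto:.1f}s cwnd={cwnd}B ssthresh={ssthresh}B idle so far={idle:.1f}s")
```

Starting from a 200 ms timer, seven consecutive timeouts leave the connection waiting 25.4 seconds in total while sending at most one segment per attempt.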

Badu Networks’ patented WarpTCP™ technology offers the only solution focused squarely on the TCP bottleneck issue for both wired and wireless networks. WarpTCP analyzes traffic to determine whether congestion is real, and prevents TCP from unnecessarily reducing throughput in response to jitter. WarpTCP’s proprietary algorithms estimate the actual bandwidth available to each TCP session in real time, filtering out transient fluctuations in RTT and packet loss. WarpTCP is specifically designed to deal with rapidly changing bandwidth, loss patterns, server loads and RTT variance, enabling it to perform well in volatile environments such as mobile and Wi-Fi networks. As a result, throughput and performance stay consistently high, even in the face of extreme fluctuations. WarpTCP improves both download and upload throughput by as much as 10x in wireless environments, even when the user moves away from the Wi-Fi access point (AP) or mobile base station (eNB), and the connection is subject to greater RF interference and channel access conflict.
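WarpTCP’s algorithms are proprietary, so the sketch below does not reproduce them. As a generic illustration of estimating available bandwidth while filtering out transient fluctuations, it uses the windowed max/min filter idea published for TCP BBR, simplified here to a window of the last N samples instead of BBR’s round- and time-based windows. The sample values are invented.

```python
from collections import deque


class WindowedFilter:
    """
    Track the max (or min) of the most recent `window` samples.

    TCP BBR estimates bottleneck bandwidth as a windowed max of measured
    delivery rate and propagation delay as a windowed min of RTT; the same
    idea is used here purely as a generic illustration.
    """

    def __init__(self, window: int, use_max: bool = True):
        self.samples = deque(maxlen=window)  # oldest samples fall out automatically
        self.pick = max if use_max else min

    def update(self, value: float) -> float:
        self.samples.append(value)
        return self.pick(self.samples)


# Delivery-rate samples (Mbit/s) with a momentary dip to 12 from a jitter burst.
bw = WindowedFilter(window=10)
print([bw.update(x) for x in [50, 48, 51, 12, 49, 50]])  # [50, 50, 51, 51, 51, 51]

# RTT samples (ms): one 180 ms spike does not raise the baseline estimate.
rtt = WindowedFilter(window=10, use_max=False)
print([rtt.update(x) for x in [20, 22, 180, 21]])  # [20, 20, 20, 20]
```

A loss- or timeout-driven sender would react to the dip or the spike by cutting its window, whereas a filtered estimate keeps sending at the rate the path has recently shown it can sustain.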

WarpTCP Architecture

WarpTCP consists of two components that work hand in hand to prevent TCP throughput collapse and optimize the use of all available bandwidth to maximize performance:

- A TCP de-bottleneck module that implements WarpTCP’s proprietary algorithms, which determine in real time whether jitter is due to congestion and prevent TCP from reducing the size of CWND when it is not.

- A transparent TCP Proxy that implements TCP session splicing by splitting the connection between the server and the client into two independent sessions. Each spliced server-to-client TCP session is replaced by a server-to-proxy sub-session and a proxy-to-client sub-session. The two sub-sessions are independent TCP sessions with independent control. This is also a key differentiator because it completely breaks the dependency between sending server and receiving client, enabling WarpTCP to implement its own flow control algorithms based on speed and capacity matching that are far superior to TCP’s.
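The session-splicing idea (one client-facing TCP connection and one server-facing TCP connection, each with its own state) can be sketched with Python’s `asyncio` streams. This is a minimal, non-transparent relay under stated assumptions: clients connect to the proxy’s address explicitly, WarpTCP’s own flow-control algorithms are not modeled, and the function names are hypothetical. Transparent interception would additionally require OS-level packet redirection.

```python
import asyncio


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes in one direction until EOF, then half-close the other leg."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()  # back-pressure: pause if this leg cannot keep up
        if writer.can_write_eof():
            writer.write_eof()
    except ConnectionError:
        pass  # one side reset the connection; stop copying


async def handle_client(client_r, client_w, upstream_host: str, upstream_port: int) -> None:
    # Sub-session 2 (proxy <-> server): a separate TCP connection whose congestion
    # and flow-control state is independent of the client-facing connection.
    server_r, server_w = await asyncio.open_connection(upstream_host, upstream_port)
    await asyncio.gather(pipe(client_r, server_w), pipe(server_r, client_w))
    for w in (client_w, server_w):
        w.close()


async def start_proxy(listen_port: int, upstream_host: str, upstream_port: int):
    # Sub-session 1 (client <-> proxy): accepted and terminated here.
    return await asyncio.start_server(
        lambda r, w: handle_client(r, w, upstream_host, upstream_port),
        "127.0.0.1", listen_port,
    )
```

Inside a running event loop, `await start_proxy(8080, "127.0.0.1", 8000)` would relay connections made to port 8080 to a server on port 8000. Because each leg is its own TCP session, loss or delay on one side triggers recovery only on that side.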

Another important advantage of WarpTCP’s architecture is a dramatic improvement in the most visible aspect of the user experience, one with a direct impact on the bottom line: page load times. Browsers open only a handful of TCP sessions to a server at the same time, whereas a web page can easily contain over 100 objects, each of which must be fetched over one of those few sessions. With WarpTCP’s session splicing and flow control, many more sessions can be handled in parallel, since connections with the browser are independent of those with the server. As a result, pages typically load 2-3x faster.
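The effect of parallelism on page loads can be approximated with a deliberately idealized model: objects are fetched in waves, one object per session per wave. The session counts and the 100 ms per-object time below are hypothetical, and the model ignores bandwidth limits, connection setup, HTTP/2 multiplexing and rendering, so it shows the shape of the effect rather than predicting real load times.

```python
import math


def page_fetch_time(objects: int, parallel_sessions: int, per_object_s: float) -> float:
    """
    Idealized model: objects are fetched in waves, one object per session per wave,
    and each wave takes per_object_s. Ignores bandwidth limits, HTTP/2 multiplexing,
    connection setup and rendering time.
    """
    waves = math.ceil(objects / parallel_sessions)
    return waves * per_object_s


# A hypothetical 100-object page where each fetch takes 100 ms.
print(page_fetch_time(100, 4, 0.1))   # 25 waves, about 2.5 s
print(page_fetch_time(100, 16, 0.1))  # 7 waves, about 0.7 s
```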

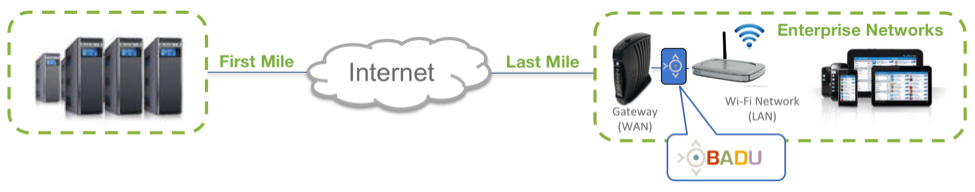

Flexible Single Instance Deployment

WarpTCP requires no changes to clients or servers. It can be installed at any single point on the network close to the source of jitter. In contrast, most packet-filter-based TCP optimization solutions require their proxy to be placed near the server, or require two proxies to be deployed, one at each end of the path between the server and the client. WarpTCP can be deployed as a software module or hardware appliance next to an application server in an on-premises data center, between a WAN gateway and a Wi-Fi access point, or at a cell tower base station. It can also be deployed as a VM instance in a cloud environment. Finally, WarpTCP requires no access to the payload: it is completely agnostic to the type of content, and to whether that content is encrypted.

Conclusion

Network QoS can only be ensured if bandwidth usage, packet loss, latency and jitter can be controlled to consistently meet application SLAs. The MPLS, SD-WAN and WAN optimization solutions that traditionally support QoS operate primarily in the application, network and data link layers of the OSI model. They do an effective job of prioritizing traffic, preventing packet loss, and reducing latency (other than that caused by distance), so they have an important role to play. However, they struggle to guarantee QoS due to the increasingly jitter-prone nature of today’s application traffic, and the fact that the bulk of it travels at least partially over volatile wireless links. The rapid adoption of cloud services and the proliferation of IoT-enabled devices will only intensify the impact of jitter and increase the incidence of jitter-induced throughput collapse in the years ahead.

Only Badu Networks’ patented WarpTCP technology deals directly with the problem of jitter-induced throughput collapse on both wired and wireless networks. By accurately determining in real time whether congestion exists for each TCP session, and preventing the transport layer’s congestion control from slowing traffic when plenty of bandwidth is available, WarpTCP addresses jitter-induced throughput collapse head-on. Combining WarpTCP’s approach to transport-layer congestion control with traditional QoS solutions that tackle the non-jitter aspects of throughput and performance provides QoS insurance for an increasingly jittery future.